My clients often wonder which Oracle tool they should use for reporting, building graphs, and generating dashboards. It’s a great question because, as anyone in this realm knows, there are many options. This post covers the three reporting tools I’m familiar with: Financial Reporting Studio (FRS), Web Analysis (WA), and Oracle Business Intelligence Answers (OBI).

One caveat: most of my experience is on the Oracle Hyperion side, so I am much more familiar with FRS and WA than with OBI, and this post is written from that perspective.

Below, I’ll give a synopsis of what each tool does, based on my experience, followed by my opinion of when each should be used.

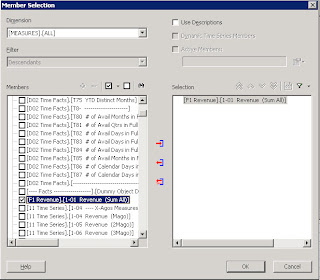

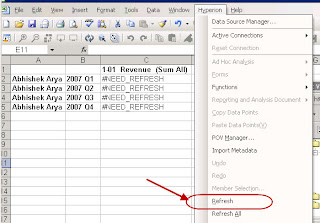

Financial Reporting Studio

FRS is a great tool for producing regular reports, such as a monthly reporting package. It lets you create production-level reports with a wide range of formatting options. FRS reports can be viewed in PDF or HTML format, either from a client component or over the web. Reports can be gathered into Books, batch-scheduled to run at whatever frequency you choose, and then saved to a particular location or emailed to a list of users.

FRS is not a tool for producing dashboards, is limited in its chart and graph functionality, and would not generally be used for ad-hoc reporting.

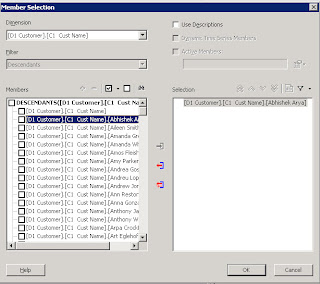

Web Analysis

WA comes from the Hyperion suite of products as its tool for building dashboards. In my experience, it’s most often used to create quick snapshots of data for management or executive-level users. WA lets you build multi-view displays of your critical business metrics in either graphical or grid format. For example, a dashboard might include a line graph of sales by region in one quadrant, a bar chart of sales by VP in another, a pie chart of expenses by category, and a grid showing spending by department. WA also includes traffic lighting, which lets you highlight or color-code significant data variances.

WA would not be used for production reporting.

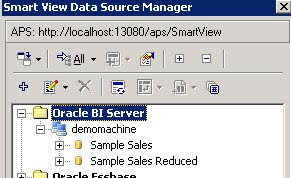

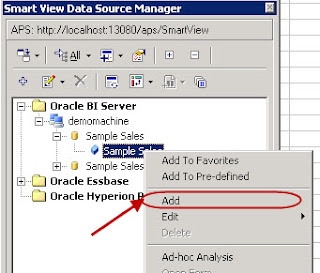

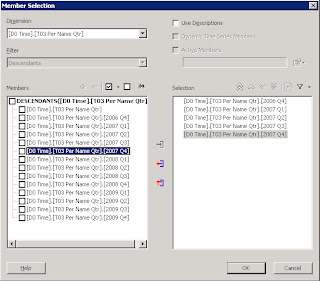

Oracle BI Answers

OBI Answers is a tool for building both reports and dashboards from a variety of sources, including relational and multidimensional databases. You can build dashboards similar to those in WA and publish reports similar to those in FRS. The broader OBI suite also offers pre-built, industry-specific modules that can make implementation easier, depending on the particular business case.

While OBI Answers can use Oracle Essbase as a data source, there are issues that prevent its use when certain hierarchies are present. This should be addressed in an upcoming release, but for now, the link between OBI and the Oracle Hyperion suite of products is very limited.

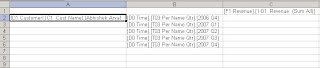

Which Tool?

So, which reporting tool should be used when? Given the current releases and the functionality available, here is how I would use them:

FRS – Use for regular production reporting such as income statements, operating expenses, headcount, and other key financial metrics from an Oracle Hyperion data source such as Essbase, Planning, or Financial Management. I generally don’t build charts and graphs in FRS because the options and functionality are fairly limited. However, if your chart requirements are simple and straightforward, FRS should work fine.

WA – I would use Web Analysis for producing grid and chart dashboards from an Oracle Hyperion data source. WA does a good job of incorporating “bells and whistles” that make a dashboard “pop”, providing important metrics quickly.

OBI Answers – OBI is a great tool for quickly building reports and dashboards from a data warehouse. In my opinion, it is the easiest of the three tools to learn and use. As I mentioned above, I don’t think it’s currently the right tool for Oracle Hyperion data sources, but that could well change in the near future.

Going Forward

In future releases, I think OBI will become the tool of choice for reporting and dashboarding, even for Oracle Hyperion data sources. Oracle is very good at creating synergies between its product lines: while each application generally has its own set of tools, those toolsets eventually converge on similar technologies. As OBI becomes more tightly integrated with the Oracle Hyperion suite, I expect it to take over that role, especially since, in my opinion, it is easier to learn and use than both Financial Reporting Studio and Web Analysis.

6. Test it

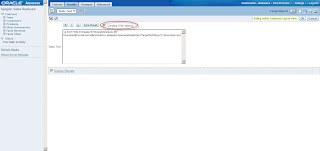

If you are manually typing it in or providing it through a column value, don't forget to replace spaces, quotes, and other special characters with their HTML equivalents.